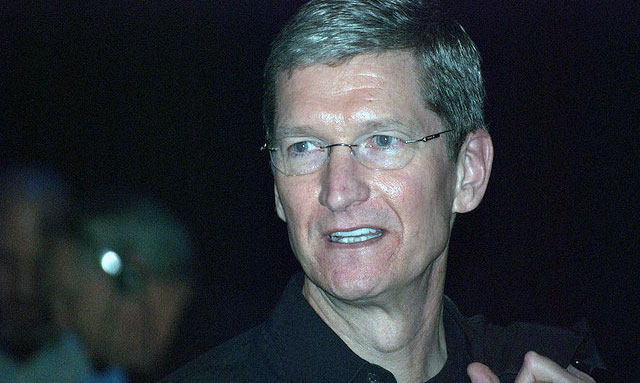

Apple has been ordered to help FBI investigators access data on the phone belonging to San Bernardino gunman Syed Rizwan Farook. The technical solution proposed by the FBI appears to undermine Apple’s earlier claim that it would be unable to help. However, in a strongly worded reply, Apple CEO Tim Cook has indicated that Apple is unwilling to comply with this order, as it would do irreparable damage to all iPhone owners’ security and privacy.

On newer Apple phones like Farook’s (an iPhone 5c, running iOS 9 according to the court motion), data stored on the phone is protected by encryption, using the passcode (which is also used for unlocking the phone) as part of the key. (This is a different issue from “end-to-end encryption”, which concerns iMessages when they are in transit between phones.)
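As a rough illustration of what using the passcode “as part of the key” can mean, the sketch below derives an encryption key from a passcode combined with a device-specific secret. It uses PBKDF2 from Python’s standard library as a stand-in; the function name `derive_key`, the `device_secret` values and the iteration count are assumptions made for illustration and do not describe Apple’s actual scheme.

```python
import hashlib


def derive_key(passcode: str, device_secret: bytes, iterations: int = 100_000) -> bytes:
    """Derive a 32-byte key from a passcode and a per-device secret (illustrative only)."""
    # The device secret is used as the salt, so the same passcode
    # produces a different key on every device.
    return hashlib.pbkdf2_hmac(
        "sha256", passcode.encode("utf-8"), device_secret, iterations, dklen=32
    )


# Hypothetical values, for demonstration only.
secret_a = b"device-A-unique-secret"
secret_b = b"device-B-unique-secret"

key = derive_key("1234", secret_a)
print(key == derive_key("1234", secret_a))  # same passcode, same device
print(key == derive_key("1235", secret_a))  # different passcode
print(key == derive_key("1234", secret_b))  # same passcode, different device
```

The point of the sketch is that without the correct passcode the key cannot be reproduced, which is why the passcode itself becomes the target.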

Apple recently claimed that it was unable to decrypt such information at all, as it does not have the passcode. This is also the line it takes in its policy statement on providing information to governments, first posted in May 2014.

Blocking brute force

In the court order, the FBI is undermining this claim. The FBI claims that Apple can write and run software that can help discover the passcode and access Farook’s data. The software should switch off security features that currently prevent a “brute force attack” — trying all possible passcodes — which should take little time if the passcode is “numerical” as claimed by the FBI.
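To see why a numerical passcode offers little protection once nothing slows the attacker down, here is a back-of-the-envelope sketch. The time per attempt (`SECONDS_PER_ATTEMPT`) is an assumed figure chosen for illustration, not a number taken from the court order, and the passcode lengths are examples only.

```python
def passcode_count(digits: int) -> int:
    """Number of possible numeric passcodes of the given length."""
    return 10 ** digits


def worst_case_hours(digits: int, seconds_per_attempt: float) -> float:
    """Hours needed to try every passcode, with no delays or erasure in the way."""
    return passcode_count(digits) * seconds_per_attempt / 3600


# Assumed cost of a single attempt; the real figure depends on the hardware.
SECONDS_PER_ATTEMPT = 0.1

for digits in (4, 6):
    hours = worst_case_hours(digits, SECONDS_PER_ATTEMPT)
    print(f"{digits} digits: {passcode_count(digits)} passcodes, about {hours:.2f} hours")
```

Under that assumption, a four-digit passcode falls in roughly a quarter of an hour and a six-digit one in a little over a day.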

One of these security features is an enforced, increasing delay between repeated passcode attempts, which would make a brute force attack take an excessively long time. The other defence against brute force is auto-erasure: if this is switched on (as appears likely), the data on the phone is effectively erased after 10 failed attempts.
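The effect of these two defences can be sketched with a toy model. The class `ToyDevice`, the function `brute_force` and the delay schedule are hypothetical; only the threshold of 10 failed attempts comes from the description above, and none of this reflects how iOS is actually implemented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyDevice:
    """Toy model of escalating delays and auto-erasure (not Apple's implementation)."""
    passcode: str
    max_failures: int = 10   # auto-erase threshold described in the article
    failures: int = 0
    erased: bool = False
    waited_seconds: int = 0  # total enforced waiting time so far

    def delay_before_next_attempt(self) -> int:
        # Hypothetical schedule: no delay for the first 4 failures,
        # then 60 s, doubling with each further failure.
        if self.failures < 4:
            return 0
        return 60 * 2 ** (self.failures - 4)

    def try_passcode(self, guess: str) -> bool:
        if self.erased:
            return False
        self.waited_seconds += self.delay_before_next_attempt()
        if guess == self.passcode:
            self.failures = 0
            return True
        self.failures += 1
        if self.failures >= self.max_failures:
            self.erased = True  # the data is now unrecoverable
        return False


def brute_force(device: ToyDevice) -> Optional[str]:
    """Try every 4-digit passcode in order; stop if the device erases itself."""
    for n in range(10_000):
        guess = f"{n:04d}"
        if device.try_passcode(guess):
            return guess
        if device.erased:
            return None
    return None


lucky = ToyDevice(passcode="0007")
print(brute_force(lucky), lucky.waited_seconds)

typical = ToyDevice(passcode="4821")
print(brute_force(typical), typical.erased)
```

In this model a naive search only succeeds if the passcode happens to be among the first ten guesses; otherwise the device erases itself long before the search gets anywhere, which is why the FBI wants both features switched off.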

Finally, to enable automation of the brute force attack, the FBI is asking for a method to enter passcodes electronically. With all of this, the FBI has been careful to point out that it would not be attempting to break encryption — but merely asking Apple to remove security measures that get in the way of the FBI discovering the key.

A message to its customers

In its response, Apple has not gone down the road of claiming that the suggested approach will not work. This may indicate that it could actually work, but it may equally be a deliberate choice to focus the argument elsewhere. Crucially, Cook’s response appears targeted at the public: it’s headed “A Message to Our Customers”. This is in line with Apple’s general marketing, which emphasises privacy as a selling point.

Cook stresses how dangerous the proposed software would be for personal security. iPhone encryption now protects all sorts of important personal data, and once software like this exists, it could end up in the wrong hands or be used for much wider purposes by governments. Essentially, it would weaken encryption permanently for Apple’s customers.

Cook also makes it clear that Apple has no sympathy with terrorists, but points out that they will always be able to find more secure methods if Apple’s security is weakened. The FBI’s argument that the software would be less risky because it would be built only for this specific phone is also quickly dismissed.

Making a stand

Cook makes a strong stand against this and other so-called backdoor methods of accessing a phone’s data. He argues that companies should not be asked to systematically undermine the security they build into their products.

This echoes Apple’s recently submitted evidence on the UK draft Investigatory Powers Bill. There, Apple strongly resisted being asked to assist in “bulk equipment interference” and “removal of electronic protection”, either of which could allow UK intelligence and law enforcement to make requests very similar to the FBI’s. IT lawyer Neil Brown even suggested that the UK has a similar law to the 1789 All Writs Act invoked to justify this US case.

The nominal audience may have been Apple’s customers, but really Cook chose to make a stand against governments. The argument that encryption is a necessary component of security and privacy in a modern society appeared to be won already. However, companies cannot meaningfully offer security and privacy measures to their customers if they are simultaneously forced to subvert them for governments, with all the risks involved.

Apple is demanding the right to operate in the market it is carving out for itself. We may feel the company has common sense on its side, but it is clear that the battle over the legal position is far from over.

- Eerke Boiten is senior lecturer, School of Computing and director of Academic Centre of Excellence in Cyber Security Research, University of Kent

- This article was originally published on The Conversation